John Goerzen: The PC & Internet Revolution in Rural America

Inspired by several others (such as Alex Schroeder s post and Szcze uja s prompt), as well as a desire to get this down for my kids, I figure it s time to write a bit about living through the PC and Internet revolution where I did: outside a tiny town in rural Kansas. And, as I ve been back in that same area for the past 15 years, I reflect some on the challenges that continue to play out.

Although the stories from the others were primarily about getting online, I want to start by setting some background. Those of you that didn t grow up in the same era as I did probably never realized that a typical business PC setup might cost $10,000 in today s dollars, for instance. So let me start with the background.

Nothing was easy

This story begins in the 1980s. Somewhere around my Kindergarten year of school, around 1985, my parents bought a TRS-80 Color Computer 2 (aka CoCo II). It had 64K of RAM and used a TV for display and sound.

This got you the computer. It didn t get you any disk drive or anything, no joysticks (required by a number of games). So whenever the system powered down, or it hung and you had to power cycle it a frequent event you d lose whatever you were doing and would have to re-enter the program, literally by typing it in.

The floppy drive for the CoCo II cost more than the computer, and it was quite common for people to buy the computer first and then the floppy drive later when they d saved up the money for that.

I particularly want to mention that computers then didn t come with a modem. What would be like buying a laptop or a tablet without wifi today. A modem, which I ll talk about in a bit, was another expensive accessory. To cobble together a system in the 80s that was capable of talking to others with persistent storage (floppy, or hard drive), screen, keyboard, and modem would be quite expensive. Adjusted for inflation, if you re talking a PC-style device (a clone of the IBM PC that ran DOS), this would easily be more expensive than the Macbook Pros of today.

Few people back in the 80s had a computer at home. And the portion of those that had even the capability to get online in a meaningful way was even smaller.

Eventually my parents bought a PC clone with 640K RAM and dual floppy drives. This was primarily used for my mom s work, but I did my best to take it over whenever possible. It ran DOS and, despite its monochrome screen, was generally a more capable machine than the CoCo II. For instance, it supported lowercase. (I m not even kidding; the CoCo II pretty much didn t.) A while later, they purchased a 32MB hard drive for it what luxury!

Just getting a machine to work wasn t easy. Say you d bought a PC, and then bought a hard drive, and a modem. You didn t just plug in the hard drive and it would work. You would have to fight it every step of the way. The BIOS and DOS partition tables of the day used a cylinder/head/sector method of addressing the drive, and various parts of that those addresses had too few bits to work with the big drives of the day above 20MB. So you would have to lie to the BIOS and fdisk in various ways, and sort of work out how to do it for each drive. For each peripheral serial port, sound card (in later years), etc., you d have to set jumpers for DMA and IRQs, hoping not to conflict with anything already in the system. Perhaps you can now start to see why USB and PCI were so welcomed.

Nothing was easy

This story begins in the 1980s. Somewhere around my Kindergarten year of school, around 1985, my parents bought a TRS-80 Color Computer 2 (aka CoCo II). It had 64K of RAM and used a TV for display and sound.

This got you the computer. It didn t get you any disk drive or anything, no joysticks (required by a number of games). So whenever the system powered down, or it hung and you had to power cycle it a frequent event you d lose whatever you were doing and would have to re-enter the program, literally by typing it in.

The floppy drive for the CoCo II cost more than the computer, and it was quite common for people to buy the computer first and then the floppy drive later when they d saved up the money for that.

I particularly want to mention that computers then didn t come with a modem. What would be like buying a laptop or a tablet without wifi today. A modem, which I ll talk about in a bit, was another expensive accessory. To cobble together a system in the 80s that was capable of talking to others with persistent storage (floppy, or hard drive), screen, keyboard, and modem would be quite expensive. Adjusted for inflation, if you re talking a PC-style device (a clone of the IBM PC that ran DOS), this would easily be more expensive than the Macbook Pros of today.

Few people back in the 80s had a computer at home. And the portion of those that had even the capability to get online in a meaningful way was even smaller.

Eventually my parents bought a PC clone with 640K RAM and dual floppy drives. This was primarily used for my mom s work, but I did my best to take it over whenever possible. It ran DOS and, despite its monochrome screen, was generally a more capable machine than the CoCo II. For instance, it supported lowercase. (I m not even kidding; the CoCo II pretty much didn t.) A while later, they purchased a 32MB hard drive for it what luxury!

Just getting a machine to work wasn t easy. Say you d bought a PC, and then bought a hard drive, and a modem. You didn t just plug in the hard drive and it would work. You would have to fight it every step of the way. The BIOS and DOS partition tables of the day used a cylinder/head/sector method of addressing the drive, and various parts of that those addresses had too few bits to work with the big drives of the day above 20MB. So you would have to lie to the BIOS and fdisk in various ways, and sort of work out how to do it for each drive. For each peripheral serial port, sound card (in later years), etc., you d have to set jumpers for DMA and IRQs, hoping not to conflict with anything already in the system. Perhaps you can now start to see why USB and PCI were so welcomed.

Sharing and finding resources

Despite the two computers in our home, it wasn t as if software written on one machine just ran on another. A lot of software for PC clones assumed a CGA color display. The monochrome HGC in our PC wasn t particularly compatible. You could find a TSR program to emulate the CGA on the HGC, but it wasn t particularly stable, and there s only so much you can do when a program that assumes color displays on a monitor that can only show black, dark amber, or light amber.

So I d periodically get to use other computers most commonly at an office in the evening when it wasn t being used.

There were some local computer clubs that my dad took me to periodically. Software was swapped back then; disks copied, shareware exchanged, and so forth. For me, at least, there was no online to download software from, and selling software over the Internet wasn t a thing at all.

Three Different Worlds

There were sort of three different worlds of computing experience in the 80s:

- Home users. Initially using a wide variety of software from Apple, Commodore, Tandy/RadioShack, etc., but eventually coming to be mostly dominated by IBM PC clones

- Small and mid-sized business users. Some of them had larger minicomputers or small mainframes, but most that I had contact with by the early 90s were standardized on DOS-based PCs. More advanced ones had a network running Netware, most commonly. Networking hardware and software was generally too expensive for home users to use in the early days.

- Universities and large institutions. These are the places that had the mainframes, the earliest implementations of TCP/IP, the earliest users of UUCP, and so forth.

The difference between the home computing experience and the large institution experience were vast. Not only in terms of dollars the large institution hardware could easily cost anywhere from tens of thousands to millions of dollars but also in terms of sheer resources required (large rooms, enormous power circuits, support staff, etc). Nothing was in common between them; not operating systems, not software, not experience. I was never much aware of the third category until the differences started to collapse in the mid-90s, and even then I only was exposed to it once the collapse was well underway.

You might say to me, Well, Google certainly isn t running what I m running at home! And, yes of course, it s different. But fundamentally, most large datacenters are running on x86_64 hardware, with Linux as the operating system, and a TCP/IP network. It s a different scale, obviously, but at a fundamental level, the hardware and operating system stack are pretty similar to what you can readily run at home. Back in the 80s and 90s, this wasn t the case. TCP/IP wasn t even available for DOS or Windows until much later, and when it was, it was a clunky beast that was difficult.

One of the things Kevin Driscoll highlights in his book called Modem World see my short post about it is that the history of the Internet we usually receive is focused on case 3: the large institutions. In reality, the Internet was and is literally a network of networks. Gateways to and from Internet existed from all three kinds of users for years, and while TCP/IP ultimately won the battle of the internetworking protocol, the other two streams of users also shaped the Internet as we now know it. Like many, I had no access to the large institution networks, but as I ve been reflecting on my experiences, I ve found a new appreciation for the way that those of us that grew up with primarily home PCs shaped the evolution of today s online world also.

An Era of Scarcity

I should take a moment to comment about the cost of software back then. A newspaper article from 1985 comments that WordPerfect, then the most powerful word processing program, sold for $495 (or $219 if you could score a mail order discount). That s $1360/$600 in 2022 money. Other popular software, such as Lotus 1-2-3, was up there as well. If you were to buy a new PC clone in the mid to late 80s, it would often cost $2000 in 1980s dollars. Now add a printer a low-end dot matrix for $300 or a laser for $1500 or even more. A modem: another $300. So the basic system would be $3600, or $9900 in 2022 dollars. If you wanted a nice printer, you re now pushing well over $10,000 in 2022 dollars.

You start to see one barrier here, and also why things like shareware and piracy if it was indeed even recognized as such were common in those days.

So you can see, from a home computer setup (TRS-80, Commodore C64, Apple ][, etc) to a business-class PC setup was an order of magnitude increase in cost. From there to the high-end minis/mainframes was another order of magnitude (at least!) increase. Eventually there was price pressure on the higher end and things all got better, which is probably why the non-DOS PCs lasted until the early 90s.

Increasing Capabilities

My first exposure to computers in school was in the 4th grade, when I would have been about 9. There was a single Apple ][ machine in that room. I primarily remember playing Oregon Trail on it. The next year, the school added a computer lab. Remember, this is a small rural area, so each graduating class might have about 25 people in it; this lab was shared by everyone in the K-8 building. It was full of some flavor of IBM PS/2 machines running DOS and Netware. There was a dedicated computer teacher too, though I think she was a regular teacher that was given somewhat minimal training on computers. We were going to learn typing that year, but I did so well on the very first typing program that we soon worked out that I could do programming instead. I started going to school early these machines were far more powerful than the XT at home and worked on programming projects there.

Eventually my parents bought me a Gateway 486SX/25 with a VGA monitor and hard drive. Wow! This was a whole different world. It may have come with Windows 3.0 or 3.1 on it, but I mainly remember running OS/2 on that machine. More on that below.

Programming

That CoCo II came with a BASIC interpreter in ROM. It came with a large manual, which served as a BASIC tutorial as well. The BASIC interpreter was also the shell, so literally you could not use the computer without at least a bit of BASIC.

Once I had access to a DOS machine, it also had a basic interpreter: GW-BASIC. There was a fair bit of software written in BASIC at the time, but most of the more advanced software wasn t. I wondered how these .EXE and .COM programs were written. I could find vague references to DEBUG.EXE, assemblers, and such. But it wasn t until I got a copy of Turbo Pascal that I was able to do that sort of thing myself. Eventually I got Borland C++ and taught myself C as well. A few years later, I wanted to try writing GUI programs for Windows, and bought Watcom C++ much cheaper than the competition, and it could target Windows, DOS (and I think even OS/2).

Notice that, aside from BASIC, none of this was free, and none of it was bundled. You couldn t just download a C compiler, or Python interpreter, or whatnot back then. You had to pay for the ability to write any kind of serious code on the computer you already owned.

The Microsoft Domination

Microsoft came to dominate the PC landscape, and then even the computing landscape as a whole. IBM very quickly lost control over the hardware side of PCs as Compaq and others made clones, but Microsoft has managed in varying degrees even to this day to keep a stranglehold on the software, and especially the operating system, side. Yes, there was occasional talk of things like DR-DOS, but by and large the dominant platform came to be the PC, and if you had a PC, you ran DOS (and later Windows) from Microsoft.

For awhile, it looked like IBM was going to challenge Microsoft on the operating system front; they had OS/2, and when I switched to it sometime around the version 2.1 era in 1993, it was unquestionably more advanced technically than the consumer-grade Windows from Microsoft at the time. It had Internet support baked in, could run most DOS and Windows programs, and had introduced a replacement for the by-then terrible FAT filesystem: HPFS, in 1988. Microsoft wouldn t introduce a better filesystem for its consumer operating systems until Windows XP in 2001, 13 years later. But more on that story later.

Free Software, Shareware, and Commercial Software

I ve covered the high cost of software already. Obviously $500 software wasn t going to sell in the home market. So what did we have?

Mainly, these things:

- Public domain software. It was free to use, and if implemented in BASIC, probably had source code with it too.

- Shareware

- Commercial software (some of it from small publishers was a lot cheaper than $500)

Let s talk about shareware. The idea with shareware was that a company would release a useful program, sometimes limited. You were encouraged to register , or pay for, it if you liked it and used it. And, regardless of whether you registered it or not, were told please copy! Sometimes shareware was fully functional, and registering it got you nothing more than printed manuals and an easy conscience (guilt trips for not registering weren t necessarily very subtle). Sometimes unregistered shareware would have a nag screen a delay of a few seconds while they told you to register. Sometimes they d be limited in some way; you d get more features if you registered. With games, it was popular to have a trilogy, and release the first episode inevitably ending with a cliffhanger as shareware, and the subsequent episodes would require registration. In any event, a lot of software people used in the 80s and 90s was shareware. Also pirated commercial software, though in the earlier days of computing, I think some people didn t even know the difference.

Notice what s missing: Free Software / FLOSS in the Richard Stallman sense of the word. Stallman lived in the big institution world after all, he worked at MIT and what he was doing with the Free Software Foundation and GNU project beginning in 1983 never really filtered into the DOS/Windows world at the time. I had no awareness of it even existing until into the 90s, when I first started getting some hints of it as a port of gcc became available for OS/2. The Internet was what really brought this home, but I m getting ahead of myself.

I want to say again: FLOSS never really entered the DOS and Windows 3.x ecosystems. You d see it make a few inroads here and there in later versions of Windows, and moreso now that Microsoft has been sort of forced to accept it, but still, reflect on its legacy. What is the software market like in Windows compared to Linux, even today?

Now it is, finally, time to talk about connectivity!

Getting On-Line

What does it even mean to get on line? Certainly not connecting to a wifi access point. The answer is, unsurprisingly, complex. But for everyone except the large institutional users, it begins with a telephone.

The telephone system

By the 80s, there was one communication network that already reached into nearly every home in America: the phone system. Virtually every household (note I don t say every person) was uniquely identified by a 10-digit phone number. You could, at least in theory, call up virtually any other phone in the country and be connected in less than a minute.

But I ve got to talk about cost. The way things worked in the USA, you paid a monthly fee for a phone line. Included in that monthly fee was unlimited local calling. What is a local call? That was an extremely complex question. Generally it meant, roughly, calling within your city. But of course, as you deal with things like suburbs and cities growing into each other (eg, the Dallas-Ft. Worth metroplex), things got complicated fast. But let s just say for simplicity you could call others in your city.

What about calling people not in your city? That was long distance , and you paid often hugely by the minute for it. Long distance rates were difficult to figure out, but were generally most expensive during business hours and cheapest at night or on weekends. Prices eventually started to come down when competition was introduced for long distance carriers, but even then you often were stuck with a single carrier for long distance calls outside your city but within your state. Anyhow, let s just leave it at this: local calls were virtually free, and long distance calls were extremely expensive.

Getting a modem

I remember getting a modem that ran at either 1200bps or 2400bps. Either way, quite slow; you could often read even plain text faster than the modem could display it. But what was a modem?

A modem hooked up to a computer with a serial cable, and to the phone system. By the time I got one, modems could automatically dial and answer. You would send a command like ATDT5551212 and it would dial 555-1212. Modems had speakers, because often things wouldn t work right, and the telephone system was oriented around speech, so you could hear what was happening. You d hear it wait for dial tone, then dial, then hopefully the remote end would ring, a modem there would answer, you d hear the screeching of a handshake, and eventually your terminal would say CONNECT 2400. Now your computer was bridged to the other; anything going out your serial port was encoded as sound by your modem and decoded at the other end, and vice-versa.

But what, exactly, was the other end?

It might have been another person at their computer. Turn on local echo, and you can see what they did. Maybe you d send files to each other. But in my case, the answer was different: PC Magazine.

PC Magazine and CompuServe

Starting around 1986 (so I would have been about 6 years old), I got to read PC Magazine. My dad would bring copies that were being discarded at his office home for me to read, and I think eventually bought me a subscription directly. This was not just a standard magazine; it ran something like 350-400 pages an issue, and came out every other week. This thing was a monster. It had reviews of hardware and software, descriptions of upcoming technologies, pages and pages of ads (that often had some degree of being informative to them). And they had sections on programming. Many issues would talk about BASIC or Pascal programming, and there d be a utility in most issues. What do I mean by a utility in most issues ? Did they include a floppy disk with software?

No, of course not. There was a literal program listing printed in the magazine. If you wanted the utility, you had to type it in. And a lot of them were written in assembler, so you had to have an assembler. An assembler, of course, was not free and I didn t have one. Or maybe they wrote it in Microsoft C, and I had Borland C, and (of course) they weren t compatible. Sometimes they would list the program sort of in binary: line after line of a BASIC program, with lines like 64, 193, 253, 0, 53, 0, 87 that you would type in for hours, hopefully correctly. Running the BASIC program would, if you got it correct, emit a .COM file that you could then run. They did have a rudimentary checksum system built in, but it wasn t even a CRC, so something like swapping two numbers you d never notice except when the program would mysteriously hang.

Eventually they teamed up with CompuServe to offer a limited slice of CompuServe for the purpose of downloading PC Magazine utilities. This was called PC MagNet. I am foggy on the details, but I believe that for a time you could connect to the limited PC MagNet part of CompuServe for free (after the cost of the long-distance call, that is) rather than paying for CompuServe itself (because, OF COURSE, that also charged you per the minute.) So in the early days, I would get special permission from my parents to place a long distance call, and after some nerve-wracking minutes in which we were aware every minute was racking up charges, I could navigate the menus, download what I wanted, and log off immediately.

I still, incidentally, mourn what PC Magazine became. As with computing generally, it followed the mass market. It lost its deep technical chops, cut its programming columns, stopped talking about things like how SCSI worked, and so forth. By the time it stopped printing in 2009, it was no longer a square-bound 400-page beheamoth, but rather looked more like a copy of Newsweek, but with less depth.

Continuing with CompuServe

CompuServe was a much larger service than just PC MagNet. Eventually, our family got a subscription. It was still an expensive and scarce resource; I d call it only after hours when the long-distance rates were cheapest. Everyone had a numerical username separated by commas; mine was 71510,1421. CompuServe had forums, and files. Eventually I would use TapCIS to queue up things I wanted to do offline, to minimize phone usage online.

CompuServe eventually added a gateway to the Internet. For the sum of somewhere around $1 a message, you could send or receive an email from someone with an Internet email address! I remember the thrill of one time, as a kid of probably 11 years, sending a message to one of the editors of PC Magazine and getting a kind, if brief, reply back!

But inevitably I had

The Godzilla Phone Bill

Yes, one month I became lax in tracking my time online. I ran up my parents phone bill. I don t remember how high, but I remember it was hundreds of dollars, a hefty sum at the time. As I watched Jason Scott s BBS Documentary, I realized how common an experience this was. I think this was the end of CompuServe for me for awhile.

Toll-Free Numbers

I lived near a town with a population of 500. Not even IN town, but near town. The calling area included another town with a population of maybe 1500, so all told, there were maybe 2000 people total I could talk to with a local call though far fewer numbers, because remember, telephones were allocated by the household. There was, as far as I know, zero modems that were a local call (aside from one that belonged to a friend I met in around 1992). So basically everything was long-distance.

But there was a special feature of the telephone network: toll-free numbers. Normally when calling long-distance, you, the caller, paid the bill. But with a toll-free number, beginning with 1-800, the recipient paid the bill. These numbers almost inevitably belonged to corporations that wanted to make it easy for people to call. Sales and ordering lines, for instance. Some of these companies started to set up modems on toll-free numbers. There were few of these, but they existed, so of course I had to try them!

One of them was a company called PennyWise that sold office supplies. They had a toll-free line you could call with a modem to order stuff. Yes, online ordering before the web! I loved office supplies. And, because I lived far from a big city, if the local K-Mart didn t have it, I probably couldn t get it. Of course, the interface was entirely text, but you could search for products and place orders with the modem. I had loads of fun exploring the system, and actually ordered things from them and probably actually saved money doing so. With the first order they shipped a monster full-color catalog. That thing must have been 500 pages, like the Sears catalogs of the day. Every item had a part number, which streamlined ordering through the modem.

Inbound FAXes

By the 90s, a number of modems became able to send and receive FAXes as well. For those that don t know, a FAX machine was essentially a special modem. It would scan a page and digitally transmit it over the phone system, where it would at least in the early days be printed out in real time (because the machines didn t have the memory to store an entire page as an image). Eventually, PC modems integrated FAX capabilities.

There still wasn t anything useful I could do locally, but there were ways I could get other companies to FAX something to me. I remember two of them.

One was for US Robotics. They had an on demand FAX system. You d call up a toll-free number, which was an automated IVR system. You could navigate through it and select various documents of interest to you: spec sheets and the like. You d key in your FAX number, hang up, and US Robotics would call YOU and FAX you the documents you wanted. Yes! I was talking to a computer (of a sorts) at no cost to me!

The New York Times also ran a service for awhile called TimesFax. Every day, they would FAX out a page or two of summaries of the day s top stories. This was pretty cool in an era in which I had no other way to access anything from the New York Times. I managed to sign up for TimesFax I have no idea how, anymore and for awhile I would get a daily FAX of their top stories. When my family got its first laser printer, I could them even print these FAXes complete with the gothic New York Times masthead. Wow! (OK, so technically I could print it on a dot-matrix printer also, but graphics on a 9-pin dot matrix is a kind of pain that is a whole other article.)

My own phone line

Remember how I discussed that phone lines were allocated per household? This was a problem for a lot of reasons:

- Anybody that tried to call my family while I was using my modem would get a busy signal (unable to complete the call)

- If anybody in the house picked up the phone while I was using it, that would degrade the quality of the ongoing call and either mess up or disconnect the call in progress. In many cases, that could cancel a file transfer (which wasn t necessarily easy or possible to resume), prompting howls of annoyance from me.

- Generally we all had to work around each other

So eventually I found various small jobs and used the money I made to pay for my own phone line and my own long distance costs. Eventually I upgraded to a 28.8Kbps US Robotics Courier modem even! Yes, you heard it right: I got a job and a bank account so I could have a phone line and a faster modem. Uh, isn t that why every teenager gets a job?

Now my local friend and I could call each other freely at least on my end (I can t remember if he had his own phone line too). We could exchange files using HS/Link, which had the added benefit of allowing split-screen chat even while a file transfer is in progress. I m sure we spent hours chatting to each other keyboard-to-keyboard while sharing files with each other.

Technology in Schools

By this point in the story, we re in the late 80s and early 90s. I m still using PC-style OSs at home; OS/2 in the later years of this period, DOS or maybe a bit of Windows in the earlier years. I mentioned that they let me work on programming at school starting in 5th grade. It was soon apparent that I knew more about computers than anybody on staff, and I started getting pulled out of class to help teachers or administrators with vexing school problems. This continued until I graduated from high school, incidentally often to my enjoyment, and the annoyance of one particular teacher who, I must say, I was fine with annoying in this way.

That s not to say that there was institutional support for what I was doing. It was, after all, a small school. Larger schools might have introduced BASIC or maybe Logo in high school. But I had already taught myself BASIC, Pascal, and C by the time I was somewhere around 12 years old. So I wouldn t have had any use for that anyhow.

There were programming contests occasionally held in the area. Schools would send teams. My school didn t really send anybody, but I went as an individual. One of them was run by a local college (but for jr. high or high school students. Years later, I met one of the professors that ran it. He remembered me, and that day, better than I did. The programming contest had problems one could solve in BASIC or Logo. I knew nothing about what to expect going into it, but I had lugged my computer and screen along, and asked him, Can I write my solutions in C? He was, apparently, stunned, but said sure, go for it. I took first place that day, leading to some rather confused teams from much larger schools.

The Netware network that the school had was, as these generally were, itself isolated. There was no link to the Internet or anything like it. Several schools across three local counties eventually invested in a fiber-optic network linking them together. This built a larger, but still closed, network. Its primary purpose was to allow students to be exposed to a wider variety of classes at high schools. Participating schools had an ITV room , outfitted with cameras and mics. So students at any school could take classes offered over ITV at other schools. For instance, only my school taught German classes, so people at any of those participating schools could take German. It was an early Zoom room. But alongside the TV signal, there was enough bandwidth to run some Netware frames. By about 1995 or so, this let one of the schools purchase some CD-ROM software that was made available on a file server and could be accessed by any participating school. Nice! But Netware was mainly about file and printer sharing; there wasn t even a facility like email, at least not on our deployment.

BBSs

My last hop before the Internet was the BBS. A BBS was a computer program, usually ran by a hobbyist like me, on a computer with a modem connected. Callers would call it up, and they d interact with the BBS. Most BBSs had discussion groups like forums and file areas. Some also had games. I, of course, continued to have that most vexing of problems: they were all long-distance.

There were some ways to help with that, chiefly QWK and BlueWave. These, somewhat like TapCIS in the CompuServe days, let me download new message posts for reading offline, and queue up my own messages to send later. QWK and BlueWave didn t help with file downloading, though.

BBSs get networked

BBSs were an interesting thing. You d call up one, and inevitably somewhere in the file area would be a BBS list. Download the BBS list and you ve suddenly got a list of phone numbers to try calling. All of them were long distance, of course. You d try calling them at random and have a success rate of maybe 20%. The other 80% would be defunct; you might get the dreaded this number is no longer in service or the even more dreaded angry human answering the phone (and of course a modem can t talk to a human, so they d just get silence for probably the nth time that week). The phone company cared nothing about BBSs and recycled their numbers just as fast as any others.

To talk to various people, or participate in certain discussion groups, you d have to call specific BBSs. That s annoying enough in the general case, but even more so for someone paying long distance for it all, because it takes a few minutes to establish a connection to a BBS: handshaking, logging in, menu navigation, etc.

But BBSs started talking to each other. The earliest successful such effort was FidoNet, and for the duration of the BBS era, it remained by far the largest. FidoNet was analogous to the UUCP that the institutional users had, but ran on the much cheaper PC hardware. Basically, BBSs that participated in FidoNet would relay email, forum posts, and files between themselves overnight. Eventually, as with UUCP, by hopping through this network, messages could reach around the globe, and forums could have worldwide participation asynchronously, long before they could link to each other directly via the Internet. It was almost entirely volunteer-run.

Running my own BBS

At age 13, I eventually chose to set up my own BBS. It ran on my single phone line, so of course when I was dialing up something else, nobody could dial up me. Not that this was a huge problem; in my town of 500, I probably had a good 1 or 2 regular callers in the beginning.

In the PC era, there was a big difference between a server and a client. Server-class software was expensive and rare. Maybe in later years you had an email client, but an email server would be completely unavailable to you as a home user. But with a BBS, I could effectively run a server. I even ran serial lines in our house so that the BBS could be connected from other rooms! Since I was running OS/2, the BBS didn t tie up the computer; I could continue using it for other things.

FidoNet had an Internet email gateway. This one, unlike CompuServe s, was free. Once I had a BBS on FidoNet, you could reach me from the Internet using the FidoNet address. This didn t support attachments, but then email of the day didn t really, either.

Various others outside Kansas ran FidoNet distribution points. I believe one of them was mgmtsys; my memory is quite vague, but I think they offered a direct gateway and I would call them to pick up Internet mail via FidoNet protocols, but I m not at all certain of this.

Pros and Cons of the Non-Microsoft World

As mentioned, Microsoft was and is the dominant operating system vendor for PCs. But I left that world in 1993, and here, nearly 30 years later, have never really returned. I got an operating system with more technical capabilities than the DOS and Windows of the day, but the tradeoff was a much smaller software ecosystem. OS/2 could run DOS programs, but it ran OS/2 programs a lot better. So if I were to run a BBS, I wanted one that had a native OS/2 version limiting me to a small fraction of available BBS server software. On the other hand, as a fully 32-bit operating system, there started to be OS/2 ports of certain software with a Unix heritage; most notably for me at the time, gcc. At some point, I eventually came across the RMS essays and started to be hooked.

Internet: The Hunt Begins

I certainly was aware that the Internet was out there and interesting. But the first problem was: how the heck do I get connected to the Internet?

Learning Link and Gopher

ISPs weren t really a thing; the first one in my area (though still a long-distance call) started in, I think, 1994. One service that one of my teachers got me hooked up with was Learning Link. Learning Link was a nationwide collaboration of PBS stations and schools, designed to build on the educational mission of PBS. The nearest Learning Link station was more than a 3-hour drive away but critically, they had a toll-free access number, and my teacher convinced them to let me use it. I connected via a terminal program and a modem, like with most other things. I don t remember much about it, but I do remember a very important thing it had: Gopher. That was my first experience with Gopher.

Learning Link was hosted by a Unix derivative (Xenix), but it didn t exactly give everyone a shell. I seem to recall it didn t have open FTP access either. The Gopher client had FTP access at some point; I don t recall for sure if it did then. If it did, then when a Gopher server referred to an FTP server, I could get to it. (I am unclear at this point if I could key in an arbitrary FTP location, or knew how, at that time.) I also had email access there, but I don t recall exactly how; probably Pine. If that s correct, that would have dated my Learning Link access as no earlier than 1992.

I think my access time to Learning Link was limited. And, since the only way to get out on the Internet from there was Gopher and Pine, I was somewhat limited in terms of technology as well. I believe that telnet services, for instance, weren t available to me.

Computer labs

There was one place that tended to have Internet access: colleges and universities. In 7th grade, I participated in a program that resulted in me being invited to visit Duke University, and in 8th grade, I participated in National History Day, resulting in a trip to visit the University of Maryland. I probably sought out computer labs at both of those. My most distinct memory was finding my way into a computer lab at one of those universities, and it was full of NeXT workstations. I had never seen or used NeXT before, and had no idea how to operate it. I had brought a box of floppy disks, unaware that the DOS disks probably weren t compatible with NeXT.

Closer to home, a small college had a computer lab that I could also visit. I would go there in summer or when it wasn t used with my stack of floppies. I remember downloading disk images of FLOSS operating systems: FreeBSD, Slackware, or Debian, at the time. The hash marks from the DOS-based FTP client would creep across the screen as the 1.44MB disk images would slowly download. telnet was also available on those machines, so I could telnet to things like public-access Archie servers and libraries though not Gopher. Still, FTP and telnet access opened up a lot, and I learned quite a bit in those years.

Continuing the Journey

At some point, I got a copy of the Whole Internet User s Guide and Catalog, published in 1994. I still have it. If it hadn t already figured it out by then, I certainly became aware from it that Unix was the dominant operating system on the Internet. The examples in Whole Internet covered FTP, telnet, gopher all assuming the user somehow got to a Unix prompt. The web was introduced about 300 pages in; clearly viewed as something that wasn t page 1 material. And it covered the command-line www client before introducing the graphical Mosaic. Even then, though, the book highlighted Mosaic s utility as a front-end for Gopher and FTP, and even the ability to launch telnet sessions by clicking on links. But having a copy of the book didn t equate to having any way to run Mosaic. The machines in the computer lab I mentioned above all ran DOS and were incapable of running a graphical browser. I had no SLIP or PPP (both ways to run Internet traffic over a modem) connectivity at home. In short, the Web was something for the large institutional users at the time.

CD-ROMs

As CD-ROMs came out, with their huge (for the day) 650MB capacity, various companies started collecting software that could be downloaded on the Internet and selling it on CD-ROM. The two most popular ones were Walnut Creek CD-ROM and Infomagic. One could buy extensive Shareware and gaming collections, and then even entire Linux and BSD distributions. Although not exactly an Internet service per se, it was a way of bringing what may ordinarily only be accessible to institutional users into the home computer realm.

Free Software Jumps In

As I mentioned, by the mid 90s, I had come across RMS s writings about free software most probably his 1992 essay Why Software Should Be Free. (Please note, this is not a commentary on the more recently-revealed issues surrounding RMS, but rather his writings and work as I encountered them in the 90s.) The notion of a Free operating system not just in cost but in openness was incredibly appealing. Not only could I tinker with it to a much greater extent due to having source for everything, but it included so much software that I d otherwise have to pay for. Compilers! Interpreters! Editors! Terminal emulators! And, especially, server software of all sorts. There d be no way I could afford or run Netware, but with a Free Unixy operating system, I could do all that. My interest was obviously piqued. Add to that the fact that I could actually participate and contribute I was about to become hooked on something that I ve stayed hooked on for decades.

But then the question was: which Free operating system? Eventually I chose FreeBSD to begin with; that would have been sometime in 1995. I don t recall the exact reasons for that. I remember downloading Slackware install floppies, and probably the fact that Debian wasn t yet at 1.0 scared me off for a time. FreeBSD s fantastic Handbook far better than anything I could find for Linux at the time was no doubt also a factor.

The de Raadt Factor

Why not NetBSD or OpenBSD? The short answer is Theo de Raadt. Somewhere in this time, when I was somewhere between 14 and 16 years old, I asked some questions comparing NetBSD to the other two free BSDs. This was on a NetBSD mailing list, but for some reason Theo saw it and got a flame war going, which CC d me. Now keep in mind that even if NetBSD had a web presence at the time, it would have been minimal, and I would have not all that unusually for the time had no way to access it. I was certainly not aware of the, shall we say, acrimony between Theo and NetBSD. While I had certainly seen an online flamewar before, this took on a different and more disturbing tone; months later, Theo randomly emailed me under the subject SLIME saying that I was, well, SLIME . I seem to recall periodic emails from him thereafter reminding me that he hates me and that he had blocked me. (Disclaimer: I have poor email archives from this period, so the full details are lost to me, but I believe I am accurately conveying these events from over 25 years ago)

This was a surprise, and an unpleasant one. I was trying to learn, and while it is possible I didn t understand some aspect or other of netiquette (or Theo s personal hatred of NetBSD) at the time, still that is not a reason to flame a 16-year-old (though he would have had no way to know my age). This didn t leave any kind of scar, but did leave a lasting impression; to this day, I am particularly concerned with how FLOSS projects handle poisonous people. Debian, for instance, has come a long way in this over the years, and even Linus Torvalds has turned over a new leaf. I don t know if Theo has.

In any case, I didn t use NetBSD then. I did try it periodically in the years since, but never found it compelling enough to justify a large switch from Debian. I never tried OpenBSD for various reasons, but one of them was that I didn t want to join a community that tolerates behavior such as Theo s from its leader.

Moving to FreeBSD

Moving from OS/2 to FreeBSD was final. That is, I didn t have enough hard drive space to keep both. I also didn t have the backup capacity to back up OS/2 completely. My BBS, which ran Virtual BBS (and at some point also AdeptXBBS) was deleted and reincarnated in a different form. My BBS was a member of both FidoNet and VirtualNet; the latter was specific to VBBS, and had to be dropped. I believe I may have also had to drop the FidoNet link for a time. This was the biggest change of computing in my life to that point. The earlier experiences hadn t literally destroyed what came before. OS/2 could still run my DOS programs. Its command shell was quite DOS-like. It ran Windows programs. I was going to throw all that away and leap into the unknown.

I wish I had saved a copy of my BBS; I would love to see the messages I exchanged back then, or see its menu screens again. I have little memory of what it looked like. But other than that, I have no regrets. Pursuing Free, Unixy operating systems brought me a lot of enjoyment and a good career.

That s not to say it was easy. All the problems of not being in the Microsoft ecosystem were magnified under FreeBSD and Linux. In a day before EDID, monitor timings had to be calculated manually and you risked destroying your monitor if you got them wrong. Word processing and spreadsheet software was pretty much not there for FreeBSD or Linux at the time; I was therefore forced to learn LaTeX and actually appreciated that. Software like PageMaker or CorelDraw was certainly nowhere to be found for those free operating systems either. But I got a ton of new capabilities.

I mentioned the BBS didn t shut down, and indeed it didn t. I ran what was surely a supremely unique oddity: a free, dialin Unix shell server in the middle of a small town in Kansas. I m sure I provided things such as pine for email and some help text and maybe even printouts for how to use it. The set of callers slowly grew over the time period, in fact.

And then I got UUCP.

Enter UUCP

Even throughout all this, there was no local Internet provider and things were still long distance. I had Internet Email access via assorted strange routes, but they were all strange. And, I wanted access to Usenet. In 1995, it happened.

The local ISP I mentioned offered UUCP access. Though I couldn t afford the dialup shell (or later, SLIP/PPP) that they offered due to long-distance costs, UUCP s very efficient batched processes looked doable. I believe I established that link when I was 15, so in 1995.

I worked to register my domain, complete.org, as well. At the time, the process was a bit lengthy and involved downloading a text file form, filling it out in a precise way, sending it to InterNIC, and probably mailing them a check. Well I did that, and in September of 1995, complete.org became mine. I set up sendmail on my local system, as well as INN to handle the limited Usenet newsfeed I requested from the ISP. I even ran Majordomo to host some mailing lists, including some that were surprisingly high-traffic for a few-times-a-day long-distance modem UUCP link!

The modem client programs for FreeBSD were somewhat less advanced than for OS/2, but I believe I wound up using Minicom or Seyon to continue to dial out to BBSs and, I believe, continue to use Learning Link. So all the while I was setting up my local BBS, I continued to have access to the text Internet, consisting of chiefly Gopher for me.

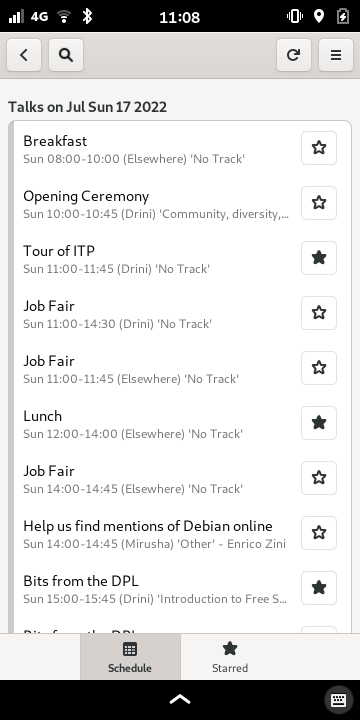

Switching to Debian

I switched to Debian sometime in 1995 or 1996, and have been using Debian as my primary OS ever since. I continued to offer shell access, but added the WorldVU Atlantis menuing BBS system. This provided a return of a more BBS-like interface (by default; shell was still an uption) as well as some BBS door games such as LoRD and TradeWars 2002, running under DOS emulation.

I also continued to run INN, and ran ifgate to allow FidoNet echomail to be presented into INN Usenet-like newsgroups, and netmail to be gated to Unix email. This worked pretty well. The BBS continued to grow in these days, peaking at about two dozen total user accounts, and maybe a dozen regular users.

Dial-up access availability

I believe it was in 1996 that dial up PPP access finally became available in my small town. What a thrill! FINALLY! I could now FTP, use Gopher, telnet, and the web all from home. Of course, it was at modem speeds, but still.

(Strangely, I have a memory of accessing the Web using WebExplorer from OS/2. I don t know exactly why; it s possible that by this time, I had upgraded to a 486 DX2/66 and was able to reinstall OS/2 on the old 25MHz 486, or maybe something was wrong with the timeline from my memories from 25 years ago above. Or perhaps I made the occasional long-distance call somewhere before I ditched OS/2.)

Gopher sites still existed at this point, and I could access them using Netscape Navigator which likely became my standard Gopher client at that point. I don t recall using UMN text-mode gopher client locally at that time, though it s certainly possible I did.

The city

Starting when I was 15, I took computer science classes at Wichita State University. The first one was a class in the summer of 1995 on C++. I remember being worried about being good enough for it I was, after all, just after my HS freshman year and had never taken the prerequisite C class. I loved it and got an A! By 1996, I was taking more classes.

In 1996 or 1997 I stayed in Wichita during the day due to having more than one class. So, what would I do then but enjoy the computer lab? The CS dept. had two of them: one that had NCD X terminals connected to a pair of SunOS servers, and another one running Windows. I spent most of the time in the Unix lab with the NCDs; I d use Netscape or pine, write code, enjoy the University s fast Internet connection, and so forth.

In 1997 I had graduated high school and that summer I moved to Wichita to attend college. As was so often the case, I shut down the BBS at that time. It would be 5 years until I again dealt with Internet at home in a rural community.

By the time I moved to my apartment in Wichita, I had stopped using OS/2 entirely. I have no memory of ever having OS/2 there. Along the way, I had bought a Pentium 166, and then the most expensive piece of computing equipment I have ever owned: a DEC Alpha, which, of course, ran Linux.

ISDN

I must have used dialup PPP for a time, but I eventually got a job working for the ISP I had used for UUCP, and then PPP. While there, I got a 128Kbps ISDN line installed in my apartment, and they gave me a discount on the service for it. That was around 3x the speed of a modem, and crucially was always on and gave me a public IP. No longer did I have to use UUCP; now I got to host my own things! By at least 1998, I was running a web server on www.complete.org, and I had an FTP server going as well.

Even Bigger Cities

In 1999 I moved to Dallas, and there got my first broadband connection: an ADSL link at, I think, 1.5Mbps! Now that was something! But it had some reliability problems. I eventually put together a server and had it hosted at an acquantaince s place who had SDSL in his apartment. Within a couple of years, I had switched to various kinds of proper hosting for it, but that is a whole other article.

In Indianapolis, I got a cable modem for the first time, with even tighter speeds but prohibitions on running servers on it. Yuck.

Challenges

Being non-Microsoft continued to have challenges. Until the advent of Firefox, a web browser was one of the biggest. While Netscape supported Linux on i386, it didn t support Linux on Alpha. I hobbled along with various attempts at emulators, old versions of Mosaic, and so forth. And, until StarOffice was open-sourced as Open Office, reading Microsoft file formats was also a challenge, though WordPerfect was briefly available for Linux.

Over the years, I have become used to the Linux ecosystem. Perhaps I use Gimp instead of Photoshop and digikam instead of well, whatever somebody would use on Windows. But I get ZFS, and containers, and so much that isn t available there.

Yes, I know Apple never went away and is a thing, but for most of the time period I discuss in this article, at least after the rise of DOS, it was niche compared to the PC market.

Back to Kansas

In 2002, I moved back to Kansas, to a rural home near a different small town in the county next to where I grew up. Over there, it was back to dialup at home, but I had faster access at work. I didn t much care for this, and thus began a 20+-year effort to get broadband in the country. At first, I got a wireless link, which worked well enough in the winter, but had serious problems in the summer when the trees leafed out. Eventually DSL became available locally highly unreliable, but still, it was something. Then I moved back to the community I grew up in, a few miles from where I grew up. Again I got DSL a bit better. But after some years, being at the end of the run of DSL meant I had poor speeds and reliability problems. I eventually switched to various wireless ISPs, which continues to the present day; while people in cities can get Gbps service, I can get, at best, about 50Mbps. Long-distance fees are gone, but the speed disparity remains.

Concluding Reflections

I am glad I grew up where I did; the strong community has a lot of advantages I don t have room to discuss here. In a number of very real senses, having no local services made things a lot more difficult than they otherwise would have been. However, perhaps I could say that I also learned a lot through the need to come up with inventive solutions to those challenges. To this day, I think a lot about computing in remote environments: partially because I live in one, and partially because I enjoy visiting places that are remote enough that they have no Internet, phone, or cell service whatsoever. I have written articles like Tools for Communicating Offline and in Difficult Circumstances based on my own personal experience. I instinctively think about making protocols robust in the face of various kinds of connectivity failures because I experience various kinds of connectivity failures myself.

(Almost) Everything Lives On

In 2002, Gopher turned 10 years old. It had probably been about 9 or 10 years since I had first used Gopher, which was the first way I got on live Internet from my house. It was hard to believe. By that point, I had an always-on Internet link at home and at work. I had my Alpha, and probably also at least PCMCIA Ethernet for a laptop (many laptops had modems by the 90s also). Despite its popularity in the early 90s, less than 10 years after it came on the scene and started to unify the Internet, it was mostly forgotten.

And it was at that moment that I decided to try to resurrect it. The University of Minnesota finally released it under an Open Source license. I wrote the first new gopher server in years, pygopherd, and introduced gopher to Debian. Gopher lives on; there are now quite a few Gopher clients and servers out there, newly started post-2002. The Gemini protocol can be thought of as something akin to Gopher 2.0, and it too has a small but blossoming ecosystem.

Archie, the old FTP search tool, is dead though. Same for WAIS and a number of the other pre-web search tools. But still, even FTP lives on today.

And BBSs? Well, they didn t go away either. Jason Scott s fabulous BBS documentary looks back at the history of the BBS, while Back to the BBS from last year talks about the modern BBS scene. FidoNet somehow is still alive and kicking. UUCP still has its place and has inspired a whole string of successors. Some, like NNCP, are clearly direct descendents of UUCP. Filespooler lives in that ecosystem, and you can even see UUCP concepts in projects as far afield as Syncthing and Meshtastic. Usenet still exists, and you can now run Usenet over NNCP just as I ran Usenet over UUCP back in the day (which you can still do as well). Telnet, of course, has been largely supplanted by ssh, but the concept is more popular now than ever, as Linux has made ssh be available on everything from Raspberry Pi to Android.

And I still run a Gopher server, looking pretty much like it did in 2002.

This post also has a permanent home on my website, where it may be periodically updated.

Three Different Worlds

There were sort of three different worlds of computing experience in the 80s:

- Home users. Initially using a wide variety of software from Apple, Commodore, Tandy/RadioShack, etc., but eventually coming to be mostly dominated by IBM PC clones

- Small and mid-sized business users. Some of them had larger minicomputers or small mainframes, but most that I had contact with by the early 90s were standardized on DOS-based PCs. More advanced ones had a network running Netware, most commonly. Networking hardware and software was generally too expensive for home users to use in the early days.

- Universities and large institutions. These are the places that had the mainframes, the earliest implementations of TCP/IP, the earliest users of UUCP, and so forth.

The difference between the home computing experience and the large institution experience were vast. Not only in terms of dollars the large institution hardware could easily cost anywhere from tens of thousands to millions of dollars but also in terms of sheer resources required (large rooms, enormous power circuits, support staff, etc). Nothing was in common between them; not operating systems, not software, not experience. I was never much aware of the third category until the differences started to collapse in the mid-90s, and even then I only was exposed to it once the collapse was well underway.

You might say to me, Well, Google certainly isn t running what I m running at home! And, yes of course, it s different. But fundamentally, most large datacenters are running on x86_64 hardware, with Linux as the operating system, and a TCP/IP network. It s a different scale, obviously, but at a fundamental level, the hardware and operating system stack are pretty similar to what you can readily run at home. Back in the 80s and 90s, this wasn t the case. TCP/IP wasn t even available for DOS or Windows until much later, and when it was, it was a clunky beast that was difficult.

One of the things Kevin Driscoll highlights in his book called Modem World see my short post about it is that the history of the Internet we usually receive is focused on case 3: the large institutions. In reality, the Internet was and is literally a network of networks. Gateways to and from Internet existed from all three kinds of users for years, and while TCP/IP ultimately won the battle of the internetworking protocol, the other two streams of users also shaped the Internet as we now know it. Like many, I had no access to the large institution networks, but as I ve been reflecting on my experiences, I ve found a new appreciation for the way that those of us that grew up with primarily home PCs shaped the evolution of today s online world also.

An Era of Scarcity

I should take a moment to comment about the cost of software back then. A newspaper article from 1985 comments that WordPerfect, then the most powerful word processing program, sold for $495 (or $219 if you could score a mail order discount). That s $1360/$600 in 2022 money. Other popular software, such as Lotus 1-2-3, was up there as well. If you were to buy a new PC clone in the mid to late 80s, it would often cost $2000 in 1980s dollars. Now add a printer a low-end dot matrix for $300 or a laser for $1500 or even more. A modem: another $300. So the basic system would be $3600, or $9900 in 2022 dollars. If you wanted a nice printer, you re now pushing well over $10,000 in 2022 dollars.

You start to see one barrier here, and also why things like shareware and piracy if it was indeed even recognized as such were common in those days.

So you can see, from a home computer setup (TRS-80, Commodore C64, Apple ][, etc) to a business-class PC setup was an order of magnitude increase in cost. From there to the high-end minis/mainframes was another order of magnitude (at least!) increase. Eventually there was price pressure on the higher end and things all got better, which is probably why the non-DOS PCs lasted until the early 90s.

Increasing Capabilities

My first exposure to computers in school was in the 4th grade, when I would have been about 9. There was a single Apple ][ machine in that room. I primarily remember playing Oregon Trail on it. The next year, the school added a computer lab. Remember, this is a small rural area, so each graduating class might have about 25 people in it; this lab was shared by everyone in the K-8 building. It was full of some flavor of IBM PS/2 machines running DOS and Netware. There was a dedicated computer teacher too, though I think she was a regular teacher that was given somewhat minimal training on computers. We were going to learn typing that year, but I did so well on the very first typing program that we soon worked out that I could do programming instead. I started going to school early these machines were far more powerful than the XT at home and worked on programming projects there.

Eventually my parents bought me a Gateway 486SX/25 with a VGA monitor and hard drive. Wow! This was a whole different world. It may have come with Windows 3.0 or 3.1 on it, but I mainly remember running OS/2 on that machine. More on that below.

Programming

That CoCo II came with a BASIC interpreter in ROM. It came with a large manual, which served as a BASIC tutorial as well. The BASIC interpreter was also the shell, so literally you could not use the computer without at least a bit of BASIC.

Once I had access to a DOS machine, it also had a basic interpreter: GW-BASIC. There was a fair bit of software written in BASIC at the time, but most of the more advanced software wasn t. I wondered how these .EXE and .COM programs were written. I could find vague references to DEBUG.EXE, assemblers, and such. But it wasn t until I got a copy of Turbo Pascal that I was able to do that sort of thing myself. Eventually I got Borland C++ and taught myself C as well. A few years later, I wanted to try writing GUI programs for Windows, and bought Watcom C++ much cheaper than the competition, and it could target Windows, DOS (and I think even OS/2).

Notice that, aside from BASIC, none of this was free, and none of it was bundled. You couldn t just download a C compiler, or Python interpreter, or whatnot back then. You had to pay for the ability to write any kind of serious code on the computer you already owned.

The Microsoft Domination

Microsoft came to dominate the PC landscape, and then even the computing landscape as a whole. IBM very quickly lost control over the hardware side of PCs as Compaq and others made clones, but Microsoft has managed in varying degrees even to this day to keep a stranglehold on the software, and especially the operating system, side. Yes, there was occasional talk of things like DR-DOS, but by and large the dominant platform came to be the PC, and if you had a PC, you ran DOS (and later Windows) from Microsoft.

For awhile, it looked like IBM was going to challenge Microsoft on the operating system front; they had OS/2, and when I switched to it sometime around the version 2.1 era in 1993, it was unquestionably more advanced technically than the consumer-grade Windows from Microsoft at the time. It had Internet support baked in, could run most DOS and Windows programs, and had introduced a replacement for the by-then terrible FAT filesystem: HPFS, in 1988. Microsoft wouldn t introduce a better filesystem for its consumer operating systems until Windows XP in 2001, 13 years later. But more on that story later.

Free Software, Shareware, and Commercial Software

I ve covered the high cost of software already. Obviously $500 software wasn t going to sell in the home market. So what did we have?

Mainly, these things:

- Public domain software. It was free to use, and if implemented in BASIC, probably had source code with it too.

- Shareware

- Commercial software (some of it from small publishers was a lot cheaper than $500)

Let s talk about shareware. The idea with shareware was that a company would release a useful program, sometimes limited. You were encouraged to register , or pay for, it if you liked it and used it. And, regardless of whether you registered it or not, were told please copy! Sometimes shareware was fully functional, and registering it got you nothing more than printed manuals and an easy conscience (guilt trips for not registering weren t necessarily very subtle). Sometimes unregistered shareware would have a nag screen a delay of a few seconds while they told you to register. Sometimes they d be limited in some way; you d get more features if you registered. With games, it was popular to have a trilogy, and release the first episode inevitably ending with a cliffhanger as shareware, and the subsequent episodes would require registration. In any event, a lot of software people used in the 80s and 90s was shareware. Also pirated commercial software, though in the earlier days of computing, I think some people didn t even know the difference.

Notice what s missing: Free Software / FLOSS in the Richard Stallman sense of the word. Stallman lived in the big institution world after all, he worked at MIT and what he was doing with the Free Software Foundation and GNU project beginning in 1983 never really filtered into the DOS/Windows world at the time. I had no awareness of it even existing until into the 90s, when I first started getting some hints of it as a port of gcc became available for OS/2. The Internet was what really brought this home, but I m getting ahead of myself.

I want to say again: FLOSS never really entered the DOS and Windows 3.x ecosystems. You d see it make a few inroads here and there in later versions of Windows, and moreso now that Microsoft has been sort of forced to accept it, but still, reflect on its legacy. What is the software market like in Windows compared to Linux, even today?

Now it is, finally, time to talk about connectivity!

Getting On-Line

What does it even mean to get on line? Certainly not connecting to a wifi access point. The answer is, unsurprisingly, complex. But for everyone except the large institutional users, it begins with a telephone.

The telephone system

By the 80s, there was one communication network that already reached into nearly every home in America: the phone system. Virtually every household (note I don t say every person) was uniquely identified by a 10-digit phone number. You could, at least in theory, call up virtually any other phone in the country and be connected in less than a minute.

But I ve got to talk about cost. The way things worked in the USA, you paid a monthly fee for a phone line. Included in that monthly fee was unlimited local calling. What is a local call? That was an extremely complex question. Generally it meant, roughly, calling within your city. But of course, as you deal with things like suburbs and cities growing into each other (eg, the Dallas-Ft. Worth metroplex), things got complicated fast. But let s just say for simplicity you could call others in your city.

What about calling people not in your city? That was long distance , and you paid often hugely by the minute for it. Long distance rates were difficult to figure out, but were generally most expensive during business hours and cheapest at night or on weekends. Prices eventually started to come down when competition was introduced for long distance carriers, but even then you often were stuck with a single carrier for long distance calls outside your city but within your state. Anyhow, let s just leave it at this: local calls were virtually free, and long distance calls were extremely expensive.

Getting a modem

I remember getting a modem that ran at either 1200bps or 2400bps. Either way, quite slow; you could often read even plain text faster than the modem could display it. But what was a modem?

A modem hooked up to a computer with a serial cable, and to the phone system. By the time I got one, modems could automatically dial and answer. You would send a command like ATDT5551212 and it would dial 555-1212. Modems had speakers, because often things wouldn t work right, and the telephone system was oriented around speech, so you could hear what was happening. You d hear it wait for dial tone, then dial, then hopefully the remote end would ring, a modem there would answer, you d hear the screeching of a handshake, and eventually your terminal would say CONNECT 2400. Now your computer was bridged to the other; anything going out your serial port was encoded as sound by your modem and decoded at the other end, and vice-versa.

But what, exactly, was the other end?

It might have been another person at their computer. Turn on local echo, and you can see what they did. Maybe you d send files to each other. But in my case, the answer was different: PC Magazine.

PC Magazine and CompuServe

Starting around 1986 (so I would have been about 6 years old), I got to read PC Magazine. My dad would bring copies that were being discarded at his office home for me to read, and I think eventually bought me a subscription directly. This was not just a standard magazine; it ran something like 350-400 pages an issue, and came out every other week. This thing was a monster. It had reviews of hardware and software, descriptions of upcoming technologies, pages and pages of ads (that often had some degree of being informative to them). And they had sections on programming. Many issues would talk about BASIC or Pascal programming, and there d be a utility in most issues. What do I mean by a utility in most issues ? Did they include a floppy disk with software?

No, of course not. There was a literal program listing printed in the magazine. If you wanted the utility, you had to type it in. And a lot of them were written in assembler, so you had to have an assembler. An assembler, of course, was not free and I didn t have one. Or maybe they wrote it in Microsoft C, and I had Borland C, and (of course) they weren t compatible. Sometimes they would list the program sort of in binary: line after line of a BASIC program, with lines like 64, 193, 253, 0, 53, 0, 87 that you would type in for hours, hopefully correctly. Running the BASIC program would, if you got it correct, emit a .COM file that you could then run. They did have a rudimentary checksum system built in, but it wasn t even a CRC, so something like swapping two numbers you d never notice except when the program would mysteriously hang.

Eventually they teamed up with CompuServe to offer a limited slice of CompuServe for the purpose of downloading PC Magazine utilities. This was called PC MagNet. I am foggy on the details, but I believe that for a time you could connect to the limited PC MagNet part of CompuServe for free (after the cost of the long-distance call, that is) rather than paying for CompuServe itself (because, OF COURSE, that also charged you per the minute.) So in the early days, I would get special permission from my parents to place a long distance call, and after some nerve-wracking minutes in which we were aware every minute was racking up charges, I could navigate the menus, download what I wanted, and log off immediately.

I still, incidentally, mourn what PC Magazine became. As with computing generally, it followed the mass market. It lost its deep technical chops, cut its programming columns, stopped talking about things like how SCSI worked, and so forth. By the time it stopped printing in 2009, it was no longer a square-bound 400-page beheamoth, but rather looked more like a copy of Newsweek, but with less depth.

Continuing with CompuServe

CompuServe was a much larger service than just PC MagNet. Eventually, our family got a subscription. It was still an expensive and scarce resource; I d call it only after hours when the long-distance rates were cheapest. Everyone had a numerical username separated by commas; mine was 71510,1421. CompuServe had forums, and files. Eventually I would use TapCIS to queue up things I wanted to do offline, to minimize phone usage online.

CompuServe eventually added a gateway to the Internet. For the sum of somewhere around $1 a message, you could send or receive an email from someone with an Internet email address! I remember the thrill of one time, as a kid of probably 11 years, sending a message to one of the editors of PC Magazine and getting a kind, if brief, reply back!

But inevitably I had

The Godzilla Phone Bill

Yes, one month I became lax in tracking my time online. I ran up my parents phone bill. I don t remember how high, but I remember it was hundreds of dollars, a hefty sum at the time. As I watched Jason Scott s BBS Documentary, I realized how common an experience this was. I think this was the end of CompuServe for me for awhile.

Toll-Free Numbers

I lived near a town with a population of 500. Not even IN town, but near town. The calling area included another town with a population of maybe 1500, so all told, there were maybe 2000 people total I could talk to with a local call though far fewer numbers, because remember, telephones were allocated by the household. There was, as far as I know, zero modems that were a local call (aside from one that belonged to a friend I met in around 1992). So basically everything was long-distance.

But there was a special feature of the telephone network: toll-free numbers. Normally when calling long-distance, you, the caller, paid the bill. But with a toll-free number, beginning with 1-800, the recipient paid the bill. These numbers almost inevitably belonged to corporations that wanted to make it easy for people to call. Sales and ordering lines, for instance. Some of these companies started to set up modems on toll-free numbers. There were few of these, but they existed, so of course I had to try them!

One of them was a company called PennyWise that sold office supplies. They had a toll-free line you could call with a modem to order stuff. Yes, online ordering before the web! I loved office supplies. And, because I lived far from a big city, if the local K-Mart didn t have it, I probably couldn t get it. Of course, the interface was entirely text, but you could search for products and place orders with the modem. I had loads of fun exploring the system, and actually ordered things from them and probably actually saved money doing so. With the first order they shipped a monster full-color catalog. That thing must have been 500 pages, like the Sears catalogs of the day. Every item had a part number, which streamlined ordering through the modem.

Inbound FAXes

By the 90s, a number of modems became able to send and receive FAXes as well. For those that don t know, a FAX machine was essentially a special modem. It would scan a page and digitally transmit it over the phone system, where it would at least in the early days be printed out in real time (because the machines didn t have the memory to store an entire page as an image). Eventually, PC modems integrated FAX capabilities.

There still wasn t anything useful I could do locally, but there were ways I could get other companies to FAX something to me. I remember two of them.

One was for US Robotics. They had an on demand FAX system. You d call up a toll-free number, which was an automated IVR system. You could navigate through it and select various documents of interest to you: spec sheets and the like. You d key in your FAX number, hang up, and US Robotics would call YOU and FAX you the documents you wanted. Yes! I was talking to a computer (of a sorts) at no cost to me!

The New York Times also ran a service for awhile called TimesFax. Every day, they would FAX out a page or two of summaries of the day s top stories. This was pretty cool in an era in which I had no other way to access anything from the New York Times. I managed to sign up for TimesFax I have no idea how, anymore and for awhile I would get a daily FAX of their top stories. When my family got its first laser printer, I could them even print these FAXes complete with the gothic New York Times masthead. Wow! (OK, so technically I could print it on a dot-matrix printer also, but graphics on a 9-pin dot matrix is a kind of pain that is a whole other article.)

My own phone line

Remember how I discussed that phone lines were allocated per household? This was a problem for a lot of reasons:

- Anybody that tried to call my family while I was using my modem would get a busy signal (unable to complete the call)

- If anybody in the house picked up the phone while I was using it, that would degrade the quality of the ongoing call and either mess up or disconnect the call in progress. In many cases, that could cancel a file transfer (which wasn t necessarily easy or possible to resume), prompting howls of annoyance from me.

- Generally we all had to work around each other

So eventually I found various small jobs and used the money I made to pay for my own phone line and my own long distance costs. Eventually I upgraded to a 28.8Kbps US Robotics Courier modem even! Yes, you heard it right: I got a job and a bank account so I could have a phone line and a faster modem. Uh, isn t that why every teenager gets a job?

Now my local friend and I could call each other freely at least on my end (I can t remember if he had his own phone line too). We could exchange files using HS/Link, which had the added benefit of allowing split-screen chat even while a file transfer is in progress. I m sure we spent hours chatting to each other keyboard-to-keyboard while sharing files with each other.

Technology in Schools

By this point in the story, we re in the late 80s and early 90s. I m still using PC-style OSs at home; OS/2 in the later years of this period, DOS or maybe a bit of Windows in the earlier years. I mentioned that they let me work on programming at school starting in 5th grade. It was soon apparent that I knew more about computers than anybody on staff, and I started getting pulled out of class to help teachers or administrators with vexing school problems. This continued until I graduated from high school, incidentally often to my enjoyment, and the annoyance of one particular teacher who, I must say, I was fine with annoying in this way.